Assessment Results: First Batch

This post originally appeared on the Software Carpentry website.

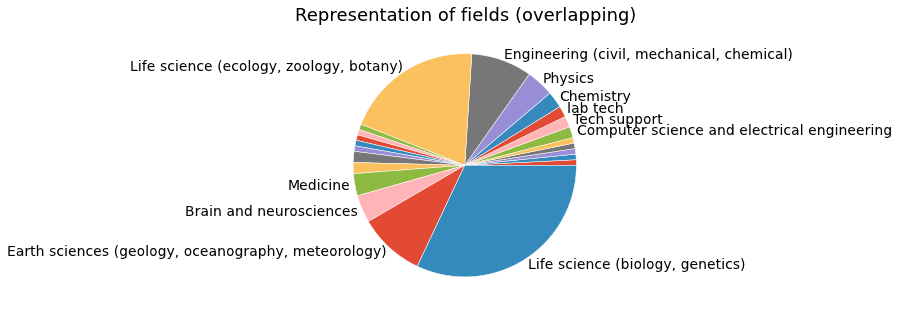

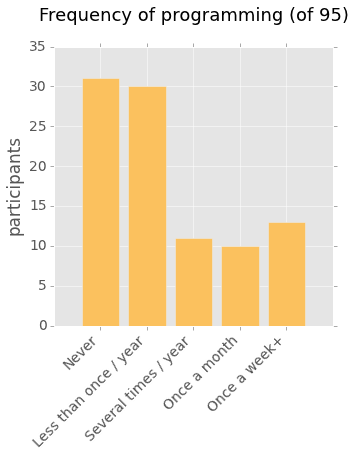

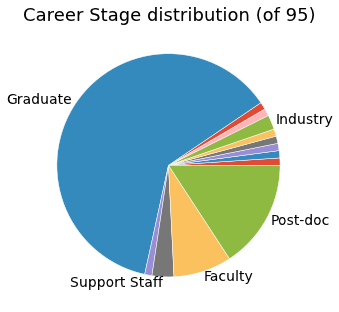

As many of our instructors and participants know, Software Carpentry has been giving surveys at every possible workshop for a number of months. This takes a surprising amount of coordination and attention, much of which we owe to our two wonderful administrators, Amy Brown and Arliss Collins. The surveys are administered before and after each workshop so that we can measure the difference between the two, which helps answer questions such as "How useful was this workshop to participants?", "Who benefits most from these workshops?", and "How could we improve the workshop?" We can also use this information to learn about participants' professional backgrounds, with questions such as "What fields are most represented among our participants?" and "What's the typical amount of programming experience?" Both could help us tailor workshops to better suit their audiences.

Here is the first batch of data from this assessment effort. At least half of the survey asks participants to self-report how familiar they are with particular tools and how skilled they think they are, using hypothetical tasks drawn from a variety of situations. The surveys measure familiarity and skill for the Shell, Version Control Systems (VCS), Testing, and SQL, which were identified as some of Software Carpentry's core competencies. In this post I'll go over the Shell and leave the other three for a later time. The first question I ask is "How do familiarity and skill with the Shell change over the course of the workshop?"

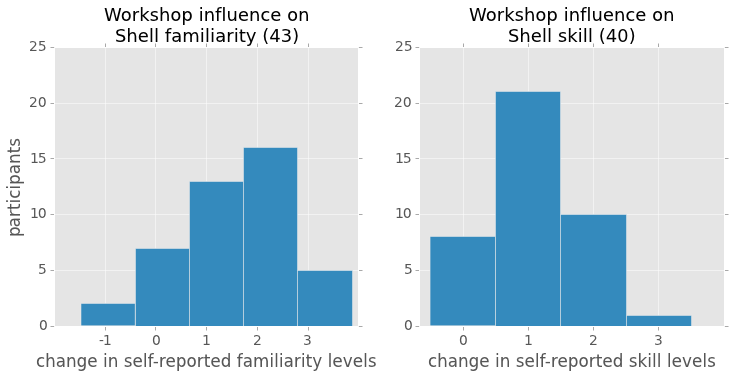

There are four levels of response for Shell familiarity, which I'll summarize as "Not familiar," "Familiar in name only," "Familiar but never used," and "Familiar because I use it." The actual choices are more detailed than I describe here. The figure titled "Workshop influence on Shell familiarity" summarizes 43 before-and-after differences and shows that the changes in familiarity level are mostly positive. This is great to see: over half the participants improved by two or more familiarity levels over the course of the workshop, which implies that they now identified as familiar with the Shell or as familiar because they use it. The number of data points is small because this analysis excludes participants who either started at the maximum familiarity level or weren't clearly taught the Shell. Unfortunately, one participant shows a negative change, presumably because they either marked the survey incorrectly or felt they had originally rated their familiarity too high.
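The before-and-after comparison can be sketched in a few lines of Python. This is a minimal illustration, not the analysis code behind the figures: it assumes the four familiarity answers are encoded as integers 0 to 3, drops participants who started at the top level as described above, and uses made-up answers rather than survey data. The helper names (`familiarity_changes`, `change_histogram`, `share_improved_by`) are hypothetical.

```python
# Familiarity choices as summarized in the post, encoded 0-3.
# The integer encoding is an assumption made for this sketch.
FAMILIARITY = [
    "Not familiar",
    "Familiar in name only",
    "Familiar but never used",
    "Familiar because I use it",
]
LEVEL = {label: i for i, label in enumerate(FAMILIARITY)}
MAX_LEVEL = len(FAMILIARITY) - 1


def familiarity_changes(pairs):
    """Return post-minus-pre level changes for (pre, post) answer pairs.

    Participants who started at the top level are skipped, because
    they have no room to improve.
    """
    return [
        LEVEL[post] - LEVEL[pre]
        for pre, post in pairs
        if LEVEL[pre] < MAX_LEVEL
    ]


def change_histogram(changes):
    """Count how many participants moved by each number of levels."""
    counts = {}
    for delta in changes:
        counts[delta] = counts.get(delta, 0) + 1
    return dict(sorted(counts.items()))


def share_improved_by(changes, k):
    """Fraction of participants whose level rose by at least k."""
    if not changes:
        return 0.0
    return sum(1 for delta in changes if delta >= k) / len(changes)


# Hypothetical answers for illustration only (not survey data).
pairs = [
    ("Not familiar", "Familiar because I use it"),
    ("Familiar in name only", "Familiar but never used"),
    ("Familiar because I use it", "Familiar because I use it"),
    ("Familiar but never used", "Familiar in name only"),
]
changes = familiarity_changes(pairs)
print(changes)                        # [3, 1, -1]
print(change_histogram(changes))      # {-1: 1, 1: 1, 3: 1}
print(share_improved_by(changes, 2))  # 0.3333333333333333
```

The third pair is dropped because it starts at the top level, and the fourth shows how a single negative change, like the one in the figure, appears in the histogram.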

In the figure "Workshop influence on Shell skill" I ask a more specific question: "How has skill level changed for participants who began the workshop able to use the Shell?" The survey asked how they would go about solving a problem that involves filtering files by their contents, and the choices were roughly "I can't do it," "Use Find, Copy, & Paste," "Basic Shell commands," and "I'd use a pipeline of commands." The results are encouraging: more than half the participants who could use the Shell to begin with left the workshop feeling they had a better grasp of how to apply it to realistic problems.
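A similar sketch for the skill question comes with the same caveats. The skill answers are assumed to be ordered 0 to 3, "able to use the Shell" is assumed to mean the pre-workshop answer "Familiar because I use it," and the record field names and sample records are hypothetical.

```python
# Skill choices as summarized in the post, encoded 0-3 (encoding assumed).
SKILL = [
    "I can't do it",
    "Use Find, Copy, & Paste",
    "Basic Shell commands",
    "I'd use a pipeline of commands",
]
SKILL_LEVEL = {label: i for i, label in enumerate(SKILL)}

# Assumption: "able to use the Shell" means this pre-workshop familiarity answer.
SHELL_USER = "Familiar because I use it"


def fraction_skill_improved(records):
    """Among participants who already used the Shell, return the fraction
    whose post-workshop skill answer is higher than their pre-workshop one.

    Each record is a dict with the hypothetical keys 'pre_familiarity',
    'pre_skill', and 'post_skill'.
    """
    users = [r for r in records if r["pre_familiarity"] == SHELL_USER]
    if not users:
        return 0.0
    improved = sum(
        1 for r in users
        if SKILL_LEVEL[r["post_skill"]] > SKILL_LEVEL[r["pre_skill"]]
    )
    return improved / len(users)


# Hypothetical records for illustration only (not survey data).
records = [
    {"pre_familiarity": SHELL_USER, "pre_skill": "Basic Shell commands",
     "post_skill": "I'd use a pipeline of commands"},
    {"pre_familiarity": SHELL_USER, "pre_skill": "Basic Shell commands",
     "post_skill": "Basic Shell commands"},
    {"pre_familiarity": SHELL_USER, "pre_skill": "Use Find, Copy, & Paste",
     "post_skill": "Basic Shell commands"},
    {"pre_familiarity": "Not familiar", "pre_skill": "I can't do it",
     "post_skill": "Basic Shell commands"},
]
print(fraction_skill_improved(records))  # 0.6666666666666666
```

The last record is ignored because that participant did not start out using the Shell, which mirrors how the skill figure restricts its population.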

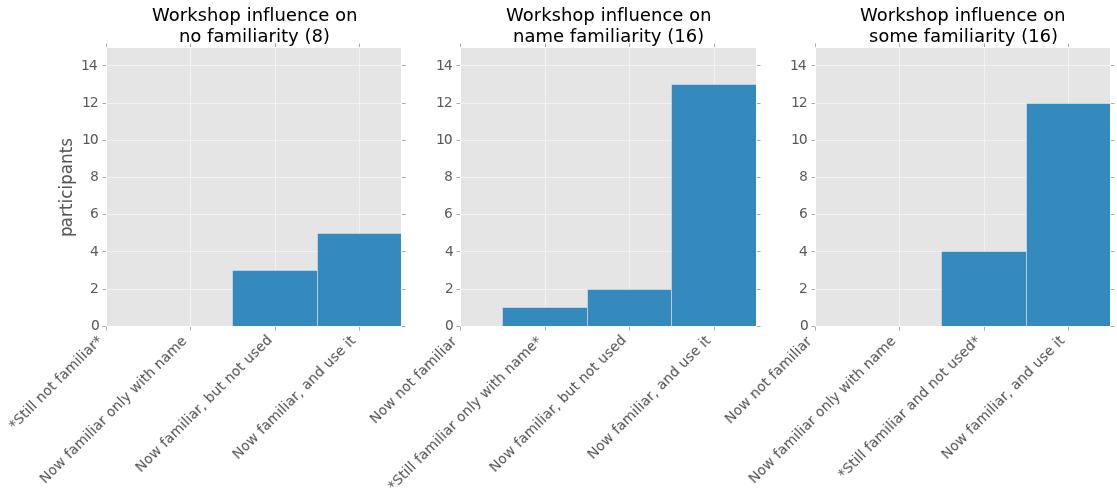

Next I asked "How much did participants improve from each beginning condition for the Shell?" The next figure shows three starting conditions: not familiar with the Shell, familiar in name only, and familiar but never used. Each subfigure shows how participants who began in that condition progressed over the workshop. It's encouraging that, for each beginning condition, nearly everyone improved their familiarity, and the majority of participants improved to the point of being users.
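The per-condition breakdown amounts to a cross-tabulation of starting answers against ending answers. The sketch below builds one with `collections.Counter` and reports what share of each starting group ended up as users; the function names and the sample pairs are hypothetical.

```python
from collections import Counter

FAMILIARITY = [
    "Not familiar",
    "Familiar in name only",
    "Familiar but never used",
    "Familiar because I use it",
]
USER = FAMILIARITY[-1]
STARTING_CONDITIONS = FAMILIARITY[:-1]  # one panel per starting condition


def transitions_by_start(pairs):
    """Group (pre, post) answers by starting condition and count end conditions."""
    table = {start: Counter() for start in STARTING_CONDITIONS}
    for pre, post in pairs:
        if pre in table:  # participants who started as users are skipped
            table[pre][post] += 1
    return table


def share_reaching_user(table):
    """Fraction of each starting group that ended at the top familiarity level."""
    shares = {}
    for start, ends in table.items():
        total = sum(ends.values())
        shares[start] = ends[USER] / total if total else None
    return shares


# Hypothetical answers for illustration only (not survey data).
pairs = [
    ("Not familiar", USER),
    ("Not familiar", "Familiar but never used"),
    ("Familiar in name only", USER),
    (USER, USER),
]
print(share_reaching_user(transitions_by_start(pairs)))
```

A starting group with no participants gets `None` rather than a misleading 0.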

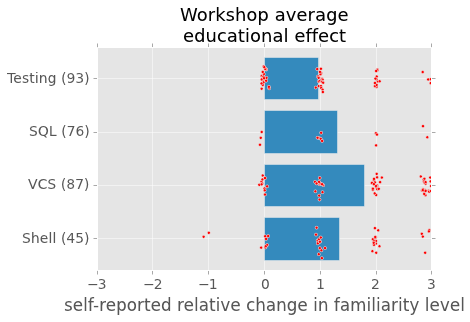

To get an overall impression of participant improvement I asked "What is the direction of relative change in familiarity for each core competency?" On average, familiarity with each core competency increases, sometimes greatly. The totals vary between items because participants who gave the maximum score on the pre-assessment are excluded: their change in level should be zero, so including them would bias the average toward no change. Raw data points have been jittered so that overlapping points are visible.
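The averaging and jittering described above might look like the following sketch. It assumes integer levels 0 to 3 for every competency, excludes participants at the maximum before the workshop, and adds uniform noise for plotting only; the function names, jitter width, seed, and sample data are all assumptions.

```python
import random
from statistics import mean

MAX_LEVEL = 3  # top of the assumed 0-3 familiarity encoding


def mean_change_by_competency(responses):
    """Return {competency: (mean change, n)} from (pre, post) level pairs,
    excluding participants who scored the maximum before the workshop."""
    summary = {}
    for competency, pairs in responses.items():
        changes = [post - pre for pre, post in pairs if pre < MAX_LEVEL]
        summary[competency] = (mean(changes) if changes else None, len(changes))
    return summary


def jitter(values, width=0.1, seed=0):
    """Add small uniform noise so overlapping points are visible when plotted.

    The noise is for display only; averages are computed on the raw values.
    """
    rng = random.Random(seed)
    return [v + rng.uniform(-width, width) for v in values]


# Hypothetical integer levels for illustration only (not survey data).
responses = {
    "Shell": [(0, 3), (1, 2), (3, 3)],
    "SQL": [(0, 1), (3, 2)],
}
print(mean_change_by_competency(responses))
print(jitter([1, 1, 2]))
```

The per-item count `n` is returned alongside the mean because, as noted above, the exclusion makes the totals differ between competencies.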

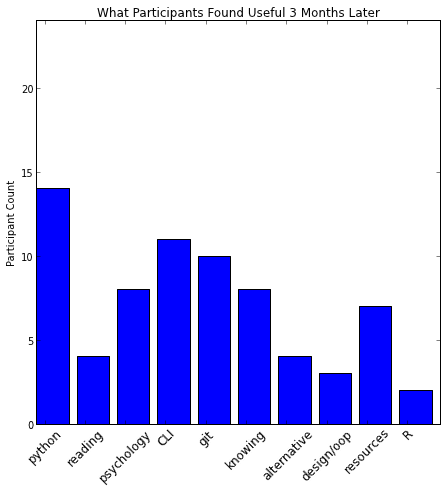

Let's finish with a figure from the assessment interviews. So far I have interviewed 23 participants three or more months after their respective Software Carpentry workshops. The following figure quantifies how often previous participants mentioned various Software Carpentry topics as being useful, good to know, or important to them. The most notable (Python, the command line, and Git) are tools that participants said they now use as a direct result of the workshop. Some of the other subjects were unexpected, such as psychology, knowing, and resources. Psychology refers to the kind of useful tidbits Greg often mentions, such as the optimal size of a function, how often to take breaks, and other productivity tips. Knowing is when a participant is glad to know about something, such as SQL, but doesn't use it and has no plans to use it in the near future. Resources is when instructors pointed out useful resources, such as an online regular expression tester or a good book about Python programming. Alternative is when a participant explicitly mentioned that it was useful to be shown alternative ways to accomplish tasks they could already perform. Lastly, reading refers to participants who expressed an interest in improving their ability to read other people's code.
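Turning coded interviews into topic counts can be sketched as follows. This assumes each interview has already been coded by hand into a list of topic labels and that a topic counts once per participant no matter how often it came up; both the input format and the sample data are hypothetical.

```python
from collections import Counter


def topic_mentions(coded_interviews):
    """Count how many participants mentioned each topic.

    coded_interviews holds one list of topic codes per participant; a
    topic mentioned several times by the same person is counted once.
    Returns (topic, count) pairs, most mentioned first, ties alphabetical.
    """
    counts = Counter()
    for codes in coded_interviews:
        counts.update(set(codes))
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical coded interviews for illustration only (not interview data).
interviews = [
    ["python", "git", "python"],
    ["python", "psychology"],
    ["git"],
]
print(topic_mentions(interviews))
# [('git', 2), ('python', 2), ('psychology', 1)]
```

Counting per participant rather than per mention keeps one enthusiastic interviewee from dominating a category; counting raw mentions would only require dropping the `set()` call.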

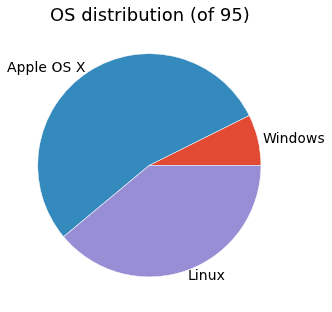

I still have more data to work through, but hopefully this gives you a good idea of how effective Software Carpentry has been so far. I'll leave you with some fun professional demographics of Software Carpentry participants. (Note: there are more fields and career stages than you might expect, because people sometimes wrote their own answer under 'other' and I haven't aggregated those by hand yet.)
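The hand aggregation mentioned in the note could be made repeatable with a small lookup table. In this sketch the mapping entries and sample answers are hypothetical examples; a real mapping would come from reading through the 'other' answers.

```python
from collections import Counter

# Hypothetical hand-built mapping from free-text 'other' answers to a
# canonical label; the real categories would come from reviewing the data.
CANONICAL = {
    "grad student": "Graduate student",
    "graduate student": "Graduate student",
    "phd student": "Graduate student",
}


def aggregate_answers(answers, mapping=CANONICAL):
    """Normalize case and whitespace, map known variants to one label,
    and count the results. Unmapped answers are kept as written."""
    counts = Counter()
    for answer in answers:
        key = " ".join(answer.lower().split())
        counts[mapping.get(key, answer.strip())] += 1
    return counts


print(aggregate_answers(["Grad student", "graduate  student", "PhD Student", "Postdoc"]))
# Counter({'Graduate student': 3, 'Postdoc': 1})
```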
