Spreadsheets, Retractions, and Bias

This post originally appeared on the Software Carpentry website.

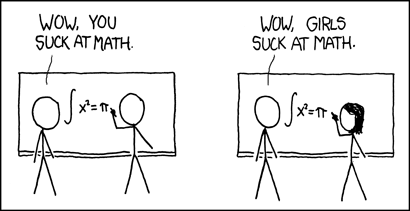

Just in case there's any misunderstanding:

Now, with that out of the way… Guy Deutscher's wonderful book Through the Language Glass devotes several pages to the Matses people of South America:

Their language…compels them to make distinctions of mind-blowing subtlety whenever they report events… [Verbs have] a system of distinctions that linguists call "evidentiality", and as it happens, the Matses system…is the most elaborate that has ever been reported… Whenever Matses speakers use a verb, they are obliged to specify—like the finickiest of lawyers—exactly how they came to know about the facts they are reporting… There are separate verbal forms depending on whether you are reporting direct experience (you saw someone passing by with your own eyes), something inferred from evidence (you saw footprints on the sand), conjecture (people always pass by at that time of day), or hearsay (your neighbor told you he had seen someone passing by). If a statement is reported with the incorrect evidentiality form, it is considered a lie.

I thought about this in the wake of reactions to two reports this week of errors in scientific work. The first was the discovery by Herndon and others of mistakes in Reinhart & Rogoff's widely quoted analysis of the relationship between debt and growth, mistakes that were due in part to bugs in an Excel spreadsheet. Quite a few of the scientists I follow on Twitter and elsewhere responded by saying, "See? I told you scientists shouldn't use Excel!"

This week's other report didn't get nearly as much attention (which is fair, since it hasn't been used to justify macroeconomic policy decisions that have impoverished millions). But over in Science, Ferrari et al. point out that an incorrect normalization step in a calculation by Conrad et al. produced results that are wrong by three orders of magnitude. What's interesting to me is that none of the comments I've seen about that incident have suggested that scientists shouldn't use R or Perl (or whatever Conrad et al. used—I couldn't see it specified in their paper). It brings to mind this classic XKCD cartoon:

but with spreadsheets on the receiving end of the prejudice.

At this point, I'd like you to take a deep breath and re-read the disclaimer at the start of this post. As I said there, I'm not suggesting that scientists should use Excel. In fact, I've spent a fair bit of the last 15 years teaching them alternatives that I think are better. What I want to point out is that we don't actually have any data on whether people make more errors, fewer errors, or the same number of errors using spreadsheets as they do using programs. The closest we come is Raymond Panko's catalog of the errors people make with spreadsheets (thanks to Andrew Ko for the pointer), but as Mark Guzdial said to me in email, "In general, language-comparison studies (treating Excel as a language here) are really hard to do. How do you compare an expert Excel spreadsheet builder to an expert Scheme programmer to an expert MATLAB programmer? Do they know how to do comparable things at comparable levels?"

It's plausible that spreadsheets are more error-prone because information is more likely to be duplicated, or because they put too much information on the screen (all… those… cells…). It's equally plausible, though, that programs are more error-prone because they hide too much, and overtax people by requiring them to reason about state transformations in order to "see" values. I personally believe that if spreadsheet users do make more errors, it's probably not because of the tool per se, but because they generally know less about computing than people who write code (because you have to learn more in order to get anything done by coding). But if I had to say any of these things in Matses, I'd be obliged to use the verb inflection meaning, "An internally consistent story for which I have no actual proof."

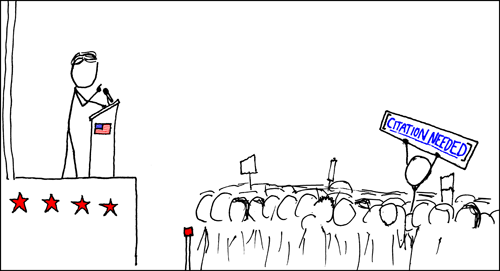

Proof is something we'd like to have more of in Software Carpentry: scientists tend to pay closer attention if you have some, and on the whole, we'd rather teach things that are provably useful than things that aren't. Some people still argue that proving X better than Y is impossible in programming because there's too much variation between individuals, but I don't believe them, if only because it's possible to prove that some things work better than others in education. (The study group we run for people who want to become instructors looks at some of this evidence.) Empirical studies of programmers are still few in number and small in scale, but we actually do know a few things, and are learning more all the time. If Software Carpentry does nothing else, I'd like it to teach scientists to respond to claims like "spreadsheets are more error-prone than programming" by demanding the evidence: not the anecdote or the "just so" story, but the evidence. Because after all, if we want to live in this world:

shouldn't we put our own house in order first?